Monolith Modernisation, Part 1: A Dysfunctional Monolith

In this article, I explore how a dysfunctional monolith originates, which organizational aspects play a role, and which high-level strategies can get you out of this dreadful place!

In my early days as a co-founder, things were pretty simple in software architecture land: front-end, back-end, and database, all in a properly set-up pipeline that allowed us to go fast without breaking too much.

We did not have a clear functional scope upfront, as we were building the product while discovering the market and our clients’ challenges. New features were built fast, often under time pressure, and our ambitious young team took up the challenge!

Growing a Dysfunctional Monolith and Trying Something Better

Over time, things deteriorated… Adding new features became cumbersome, and updating the frameworks we used became more & more expensive, slowing us down. Team morale dropped as well: working in the codebase was no longer fun. With our growth came the dreaded monolith.

So when we added a big new component to the architecture, we changed a few foundational elements. We worked test-driven and developed a more modular, hexagonal architecture, which allowed easier evolution, simpler bug fixing, and faster development. We got a better developer experience and stayed continuously aligned with recent versions of our dependencies. Long story short: we delivered better software faster.
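To make "hexagonal architecture" (also known as ports and adapters) a bit more concrete, here is a minimal Python sketch. The invoicing domain, the class names, and the in-memory adapter are hypothetical illustrations, not code from our product. The domain service depends only on a port that it defines itself. An in-memory adapter then lets a test drive the business logic without a database or web framework, which is what makes working test-driven cheap.

```python
from dataclasses import dataclass, replace
from typing import Protocol


@dataclass(frozen=True)
class Invoice:
    invoice_id: str
    amount_cents: int
    paid: bool = False


# Port (hypothetical): the interface the domain needs from the outside world, owned by the domain.
class InvoiceRepository(Protocol):
    def get(self, invoice_id: str) -> Invoice: ...

    def save(self, invoice: Invoice) -> None: ...


# Domain service (inside the hexagon): business rules only, no database or web imports.
class InvoiceService:
    def __init__(self, repo: InvoiceRepository) -> None:
        self._repo = repo

    def mark_paid(self, invoice_id: str) -> Invoice:
        invoice = self._repo.get(invoice_id)
        if invoice.paid:
            raise ValueError(f"invoice {invoice_id} is already paid")
        updated = replace(invoice, paid=True)
        self._repo.save(updated)
        return updated


# Adapter: an in-memory implementation of the port, convenient for test-driven work.
# A database-backed adapter would implement the same two methods.
class InMemoryInvoiceRepository:
    def __init__(self) -> None:
        self._rows: dict[str, Invoice] = {}

    def get(self, invoice_id: str) -> Invoice:
        return self._rows[invoice_id]

    def save(self, invoice: Invoice) -> None:
        self._rows[invoice.invoice_id] = invoice


# Wiring: a test plugs the in-memory adapter into the service; no infrastructure needed.
repo = InMemoryInvoiceRepository()
repo.save(Invoice("INV-1", 12_500))
service = InvoiceService(repo)
print(service.mark_paid("INV-1").paid)  # True
```

The design choice is that dependencies point inward. Swapping the in-memory adapter for a production one changes only the wiring, not `InvoiceService`.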

This, of course, leaves the question of how to evolve the old codebase: everybody wants better software faster, across the entire application and codebase.

In a series of articles, I’ll dive deeper into this common challenge among software crafters, product owners/managers, and technical leaders in organizations small & large. In this part 1 of (probably) 4, I’ll focus on the reasons applications become harder to work with, and the organizational aspects to tackle before starting the journey. I'll also briefly introduce the strategic options on the table. In subsequent parts, I will dig deeper, covering the more technical aspects and implementation tactics.

What Makes an Application Dysfunctional (to a Certain Degree)?

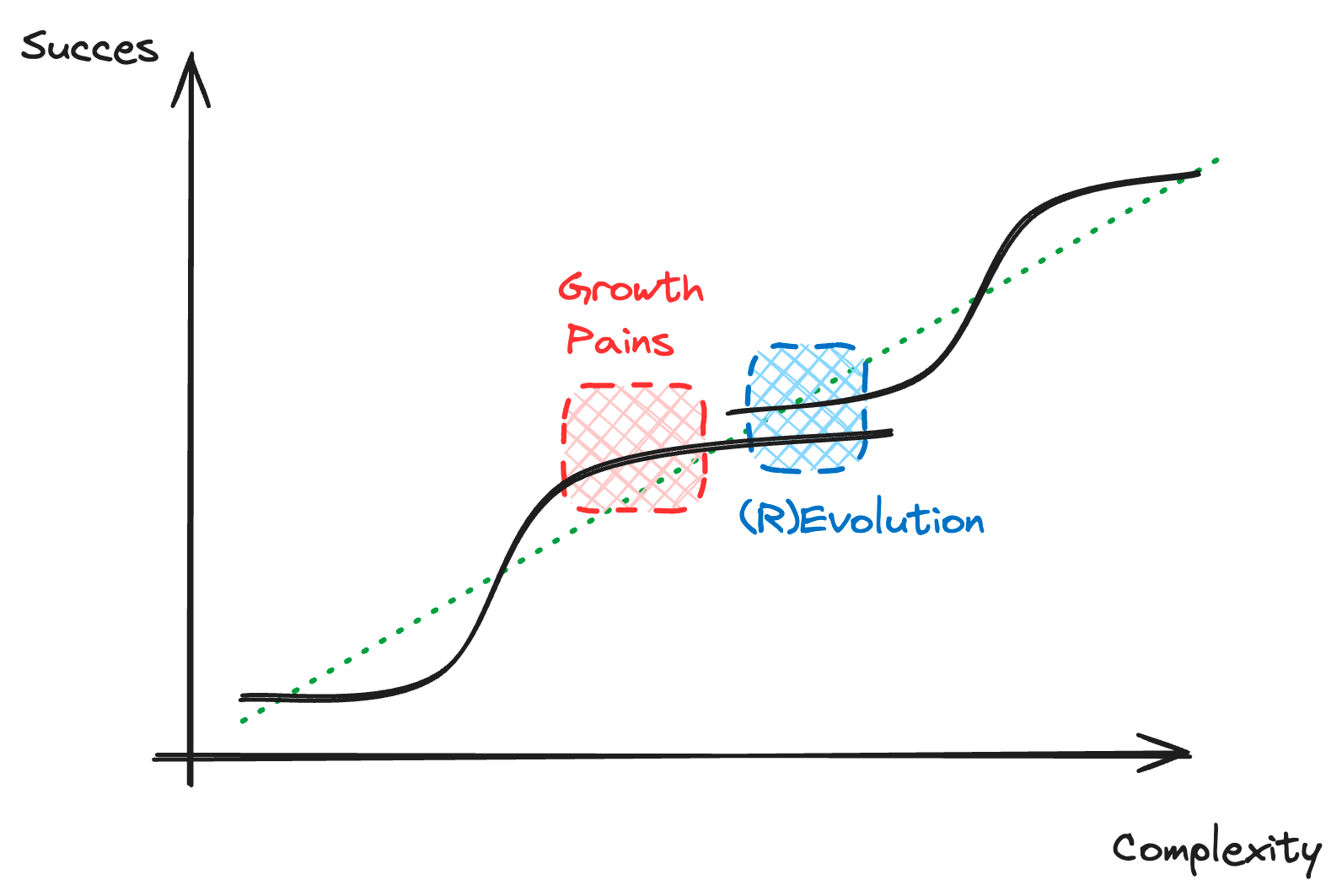

First of all: it is normal for an architecture to become “unfit” after a while. What works well for 2 developers and 5 clients will not work for 20 developers and 500 clients. And vice versa: building stuff as if you have 50,000 clients while you only have 5 is an utter waste of resources.

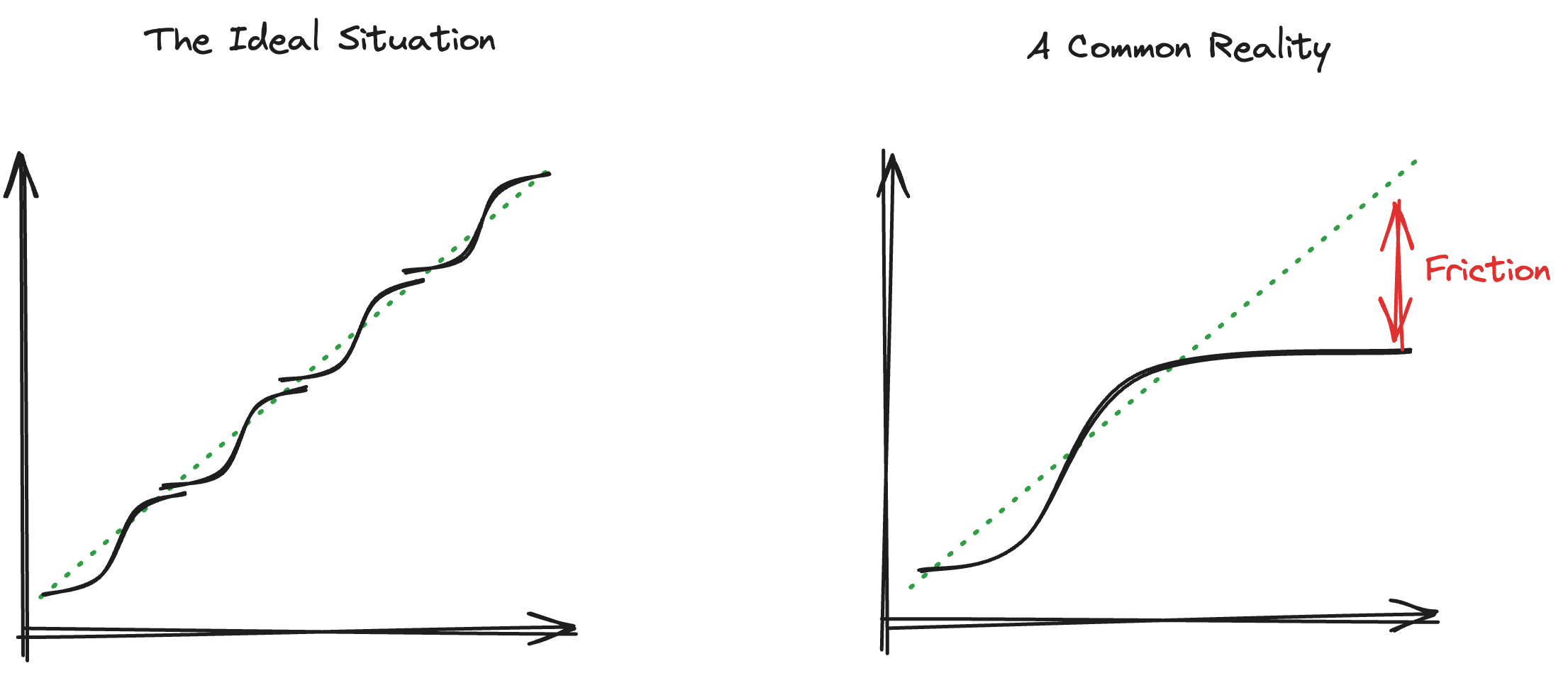

Indeed, a success story is really a sequence of growth phases, each with its own growing pains. As a team builds more features (growing complexity), it lays the foundation for success, which may (or may not) come. When it does, it creates friction: the software cannot keep up as fast as needed, which at some point requires changing some (foundational) assumptions. It is time for a (r)evolution.

So which frictions indicate that it is time to evolve?

They typically come via 3 channels: unhappy clients, unhappy business, or an unhappy team.

Bugs that do not get fixed, regressions with new releases, performance issues, or a functional standstill will trouble existing clients. Unhappy clients, limited flexibility or agility, and a lack of innovation will make business people’s lives harder in our fast-moving context. An unhappy business, difficult debugging & troubleshooting, slow and cumbersome releases, increasing technical debt, and difficulty attracting talent will lower the development team’s morale.

In an ideal world, these frictions are addressed early and often, with small evolutions as soon as a friction is spotted. But a common reality is that frictions build up because there is no time, no budget, no experience, … to introduce the incremental changes and persevere with them.

In the latter scenario, the frictions build up to such an extent that we can speak of a dysfunctional monolith. Addressing them will require a longer and more expensive change track, which, when done right, can lay the foundation for a more incremental evolutionary architecture.

The remainder of this article will focus on the situation where a big change is needed.

Getting Ready

Any change track will benefit heavily from (i) a good understanding of where you are now, (ii) a rough idea of the destination, and (iii) a commitment from all involved parties to travel the (sometimes bumpy) roads.

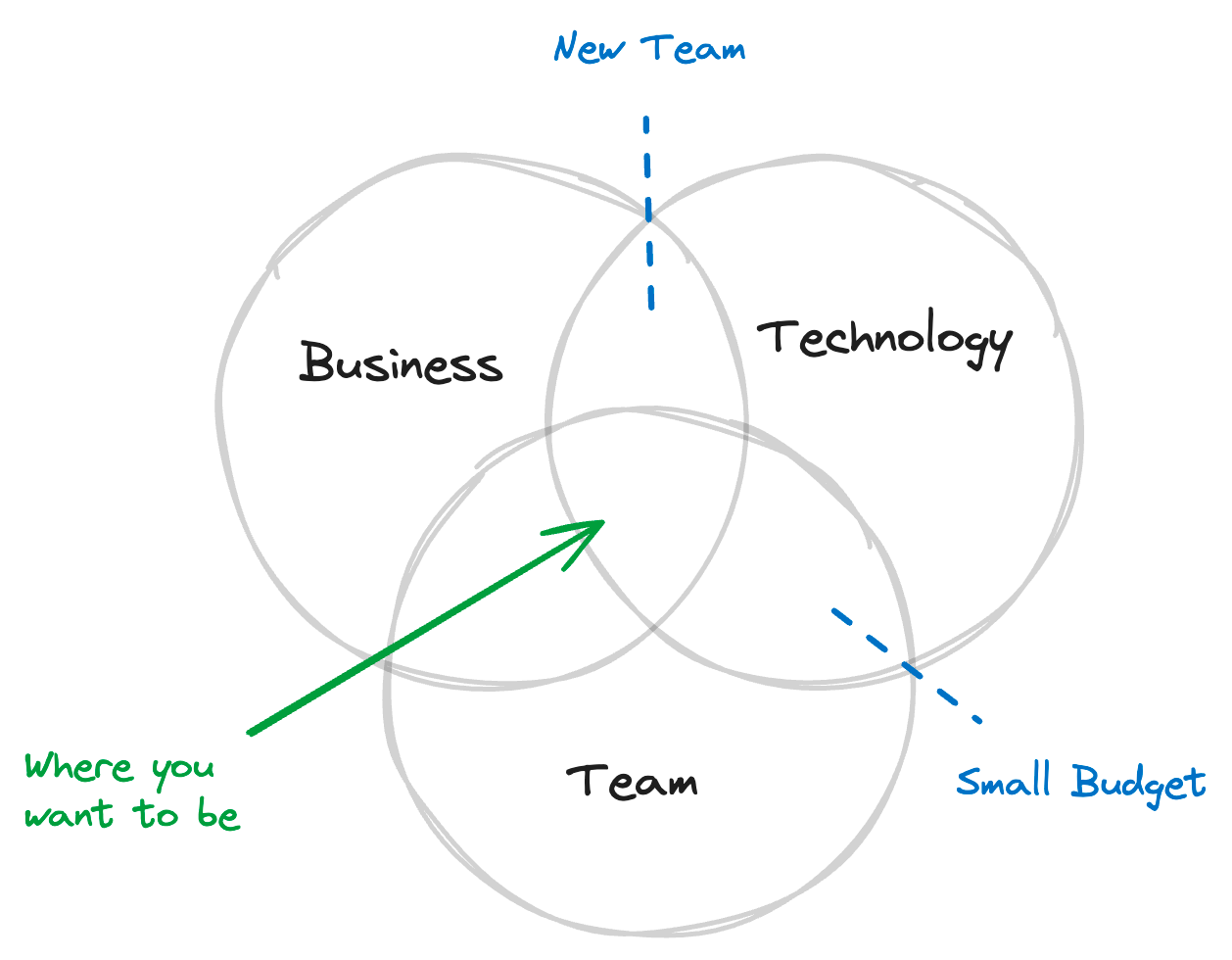

In the context of a dysfunctional monolith, 3 parties are involved:

- The business, which needs to maximize business value with scarce funding

- Technology, which works with bits & bytes and leaves no room for negotiation: it either works or it doesn't

- The development team, for whom there is always more work than time

Technology must be part of the exercise; otherwise, there would not be much change to the monolith 😀

In terms of business & team involvement, there are options when either party is not on board with the change:

- If the business is not on board, no budget will be freed up for the change. This does not mean nothing can happen: the dev team can take small, incremental steps to improve the situation. These improvements can then be used to build a case for freeing up more time & budget to support a more profound upgrade.

- If the team is not on board, a new team may be installed. If the application is no longer of strategic importance to the organization, it makes sense to consider handing it over to an external provider. In all other cases, this is a high-risk scenario that is only viable when the technology (and possibly the current team) is so dysfunctional that a gradual evolution is doomed to fail.

What you want to aim for, however, is good alignment across all 3 stakeholders:

- The mandate from the business to spend sufficient budget & time to make a significant improvement. This need not follow a waterfall mindset, with resources allocated for the entire migration (the estimates will be wrong anyway), but it should at least be enough to create a safe space for learning, experimenting, and yielding a first set of results. Based on the positive impact on the business (or the lack of it), a new budget round can then be defined.

- The buy-in from the team to embark on the journey: a journey that will require them to change, will have several bumps, and will be more complex than working on a bug or feature in a stable app. Indeed, 2 tracks need to be balanced: addressing the existing frictions while keeping the “old” situation viable for the business.

- The technology choices need to be aligned with both the current situation and the TO-BE situation. Being too ambitious will lead to castles in the air and half-finished work. Thinking too small will lead to marginal improvements that do not prepare you for the next wave of success. All that in a context of unforgiving binary computers: it either works or it doesn't 😄

This list (of common myths and guiding questions) helps you get a good understanding of your current situation. You can use this as inspiration to be better prepared to start the migration journey.

Setting the Scene with a Simple and Very Common Architecture

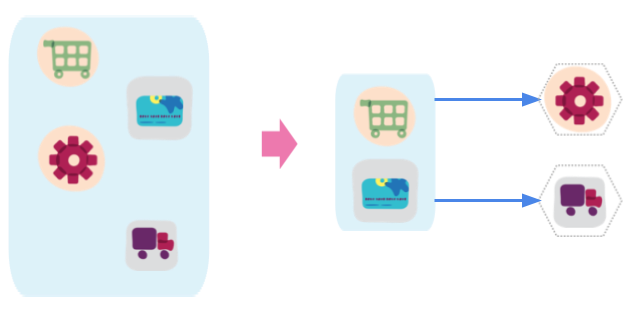

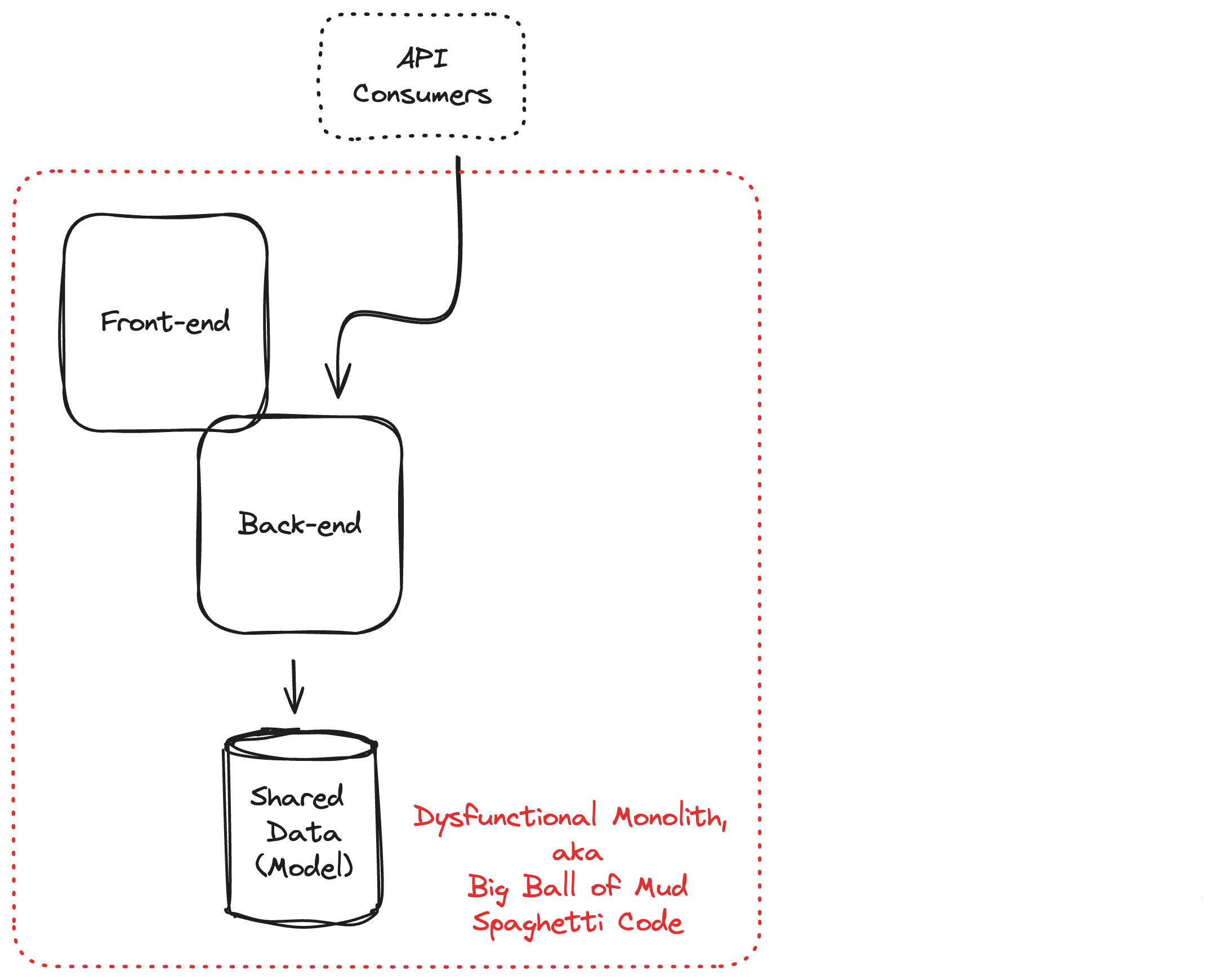

Assume we have the following sample architecture that is representative of many applications out there.

An in-house developed front-end talks to the (dysfunctional) monolith through a synchronous API (e.g., JSON over HTTP). External API consumers talk to a subset of that API, also synchronously. The entire monolith works against a single data set, with one data model for the entire codebase.

The simplicity of this architecture has huge advantages, each of them coming with a pitfall…

- Any exposed API (internal or external) can use all internal functionality, which is ideal for initial velocity and for adhering to the DRY principle. But it comes with the pitfall of an ever-growing collection of dependencies, most likely in all directions (aka spaghetti code or a big ball of mud);

- Any internal functionality can use all data, which is immediately up to date. Here as well, this is ideal for initial velocity and very convenient for developing functions with broad data needs. The pitfall is the growing number of complex queries, data joins, and transactions across multiple areas of your data model, making it harder and harder to keep performance good and evolution easy…
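To illustrate the second pitfall, here is a small Python sketch using an in-memory SQLite database. The schema (customers, orders, invoices) and the "open balances" report are hypothetical. Because every feature can query every table, the reporting function quietly couples itself to three areas of the data model. A local change in one area, such as renaming an invoicing column, then breaks it at runtime.

```python
import sqlite3

# Hypothetical shared schema: one database and one data model for the whole monolith.
SCHEMA = """
CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE orders    (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL);
CREATE TABLE invoices  (id INTEGER PRIMARY KEY, order_id INTEGER, paid INTEGER);
INSERT INTO customers VALUES (1, 'Acme');
INSERT INTO orders    VALUES (10, 1, 250.0);
INSERT INTO invoices  VALUES (100, 10, 0);
"""


def make_shared_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    return conn


# A reporting feature reaches straight into the customer, order, and invoicing areas.
def open_balances(conn: sqlite3.Connection) -> list:
    return conn.execute(
        """
        SELECT c.name, SUM(o.total)
        FROM customers c
        JOIN orders o   ON o.customer_id = c.id
        JOIN invoices i ON i.order_id = o.id
        WHERE i.paid = 0
        GROUP BY c.name
        """
    ).fetchall()


conn = make_shared_db()
print(open_balances(conn))  # [('Acme', 250.0)]

# The invoicing area renames a column for its own reasons
# (RENAME COLUMN requires SQLite 3.25 or later)...
conn.execute("ALTER TABLE invoices RENAME COLUMN paid TO is_paid")

# ...and the reporting feature, which invoicing never knew about, now fails.
try:
    open_balances(conn)
except sqlite3.OperationalError as exc:
    print("reporting broke:", exc)
```

Nothing in the invoicing code points to the report, so the coupling only surfaces when the query runs.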

As mentioned above, the benefits may outweigh the inconveniences for a long time. Only growing success (leading to a growing application and more usage) will push the limits of this setup and cause friction in terms of business, technology, and team functioning (or all of the above). When the friction becomes too big to bear, it is time for change.

Thinking in Modules

Systems should be built from cohesive, loosely coupled components (modules)

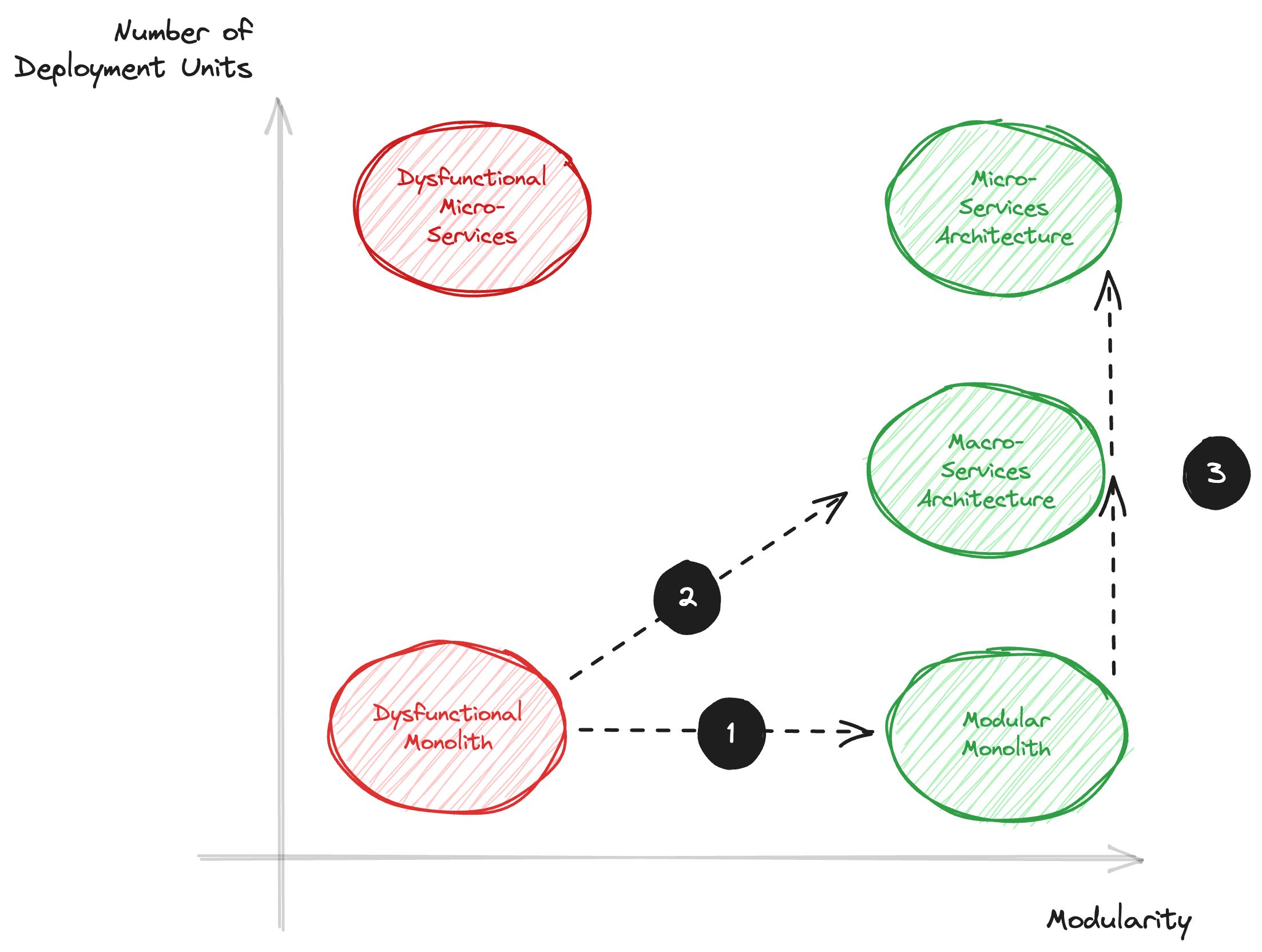

A few years ago, migrating from a monolith to microservices was all the rage, promising a very flexible and powerful modular architecture. This is, however, only one side of the coin; the flip side is the intrinsic complexity of distributed systems. Today, there is a much more nuanced view on addressing the dysfunctions of a monolith: modular monoliths are part of the solution space.

But what are modules?

Martin Fowler provides an interesting definition:

modules [ … are ] a division of a software system that allow us to modify a system by only understanding some well-defined subsets of it - modules being those well-defined subsets.

Various properties of good modules have been identified and proposed: independence, encapsulation, reusability, exchangeability, a well-defined interface, …

Whatever definition or implementation technique you use, the goals of splitting your software into several modules are (i) to reduce the cognitive load of working on it and (ii) to make (safe) changes easier. The underlying mantra is to make the unit of change small(er), allowing for easier evolution. This is needed to meet the business need for agility in a cost-efficient way, to give the development team(s) a productive environment that is fun to work in, and to let you keep your codebase up to date with its dependencies as time goes by.

Indeed, the level of modularity is the best predictor of how easily an application can evolve, preparing you for changes in functional and non-functional requirements.
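Fowler's definition can be made tangible with a small Python sketch. The catalog and billing modules, the `Price` type, and the `price_of` method are hypothetical. Billing only needs to understand one well-defined subset of catalog: the `price_of` method and the `Price` value it returns. The catalog internals (here, how list prices and discounts are stored) can therefore change without touching billing. In a real codebase the two modules would live in separate packages. They share one block here only to keep the example self-contained.

```python
from dataclasses import dataclass


# --- catalog module (hypothetical) --------------------------------------
@dataclass(frozen=True)
class Price:
    """Public value object: the only catalog type other modules see."""

    sku: str
    cents: int


class Catalog:
    """Public interface of the catalog module."""

    def __init__(self) -> None:
        # Internal details: other modules must not read or depend on these dicts.
        self._list_prices = {"BOOK-1": 2_000, "PEN-7": 150}
        self._discount_pct = {"BOOK-1": 10}

    def price_of(self, sku: str) -> Price:
        base = self._list_prices[sku]
        discount = self._discount_pct.get(sku, 0)
        return Price(sku, base * (100 - discount) // 100)


# --- billing module (hypothetical) --------------------------------------
class Billing:
    """Depends only on Catalog.price_of, not on how prices are stored."""

    def __init__(self, catalog: Catalog) -> None:
        self._catalog = catalog

    def total_cents(self, skus: list[str]) -> int:
        return sum(self._catalog.price_of(sku).cents for sku in skus)


print(Billing(Catalog()).total_cents(["BOOK-1", "PEN-7"]))  # 1950
```

The unit of change stays small. For example, replacing the two internal dicts with a database lookup would change nothing in `Billing`, as long as `price_of` keeps its contract.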

High-level Migration Strategies

With the knowledge gathered above, we can now identify several viable migration strategies (towards more modularity). We also know where we do NOT want to end up: transforming a dysfunctional "big ball of mud" monolith into a distributed monolith will not make anyone happy!

In Part 2, I will explore these migration strategies and assess when each of them makes the most sense.

Later on, I will dive into the technical tactics and patterns for a successful migration (Part 3) and a day in the life of a team working on a monolith migration (Part 4).

References